The ability to automatically recognise notable sites in the physical world using artificial intelligence embedded in mobile devices can pave the way to new forms of urban exploration and open novel channels of interactivity between residents, travellers and cities.

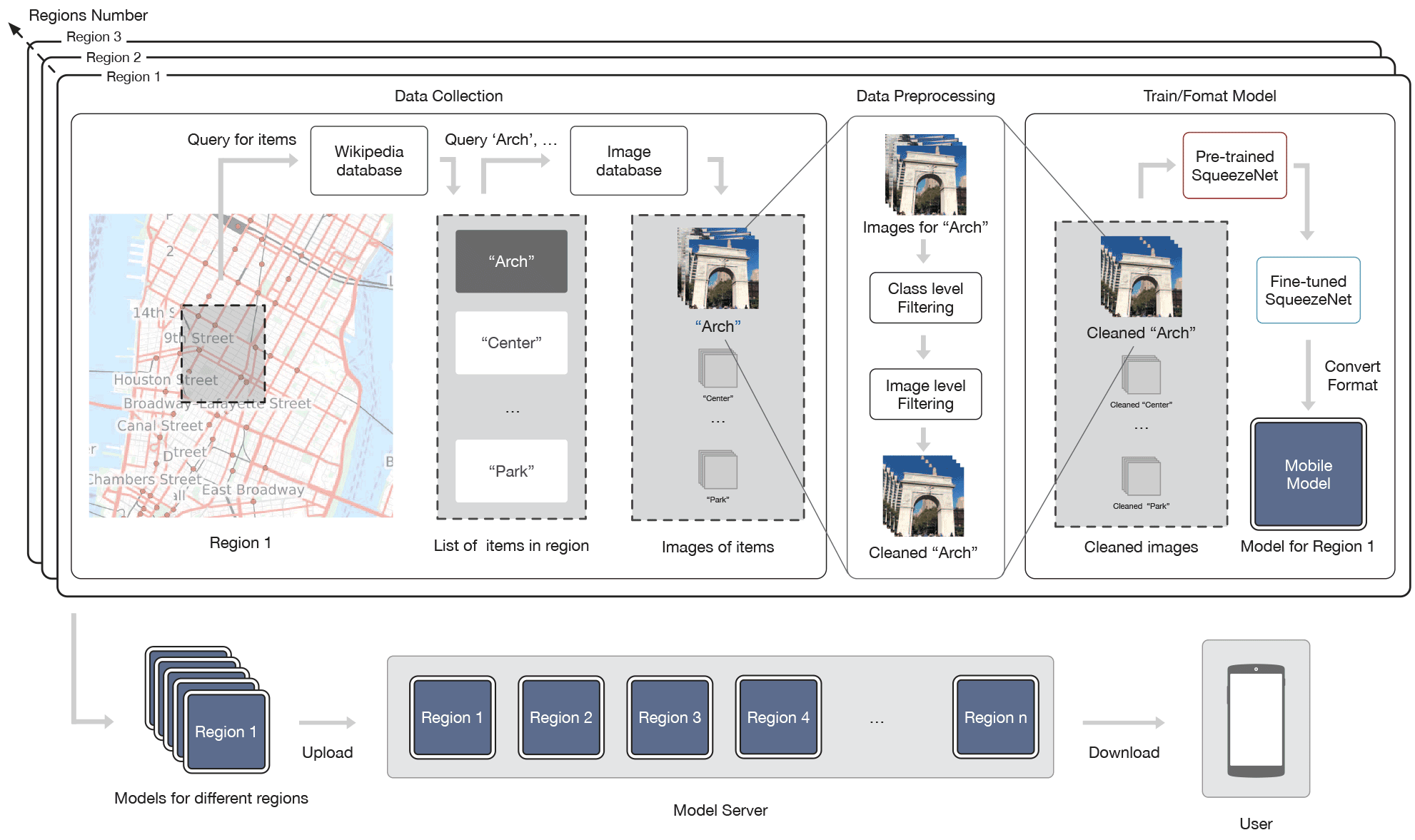

In this work we design a mobile system that can automatically recognise sites of interest and project relevant information to a user who navigates the city. We build a collection of notable sites using Wikipedia and then exploit online services such as Google Images and Flickr to gather large sets of crowdsourced imagery describing those sites. These images are then used to train minimal deep learning architectures that can be effectively deployed in dedicated applications on mobile devices. Through an evaluation and a series of online and real-world experiments, we identify a number of key challenges in deploying site recognition systems and highlight the importance of incorporating mobile contextual information to facilitate the visual recognition task.